It’s mid-February in Toronto: -10 °C and snowy. The memory of chirping summer fields is dim. But in my heart there is always a cricket-loud meadow.

Short of moving somewhere warmer, I’m going to have to make my own midwinter crickets. I have micro-controllers and tiny speakers: how hard can this be?

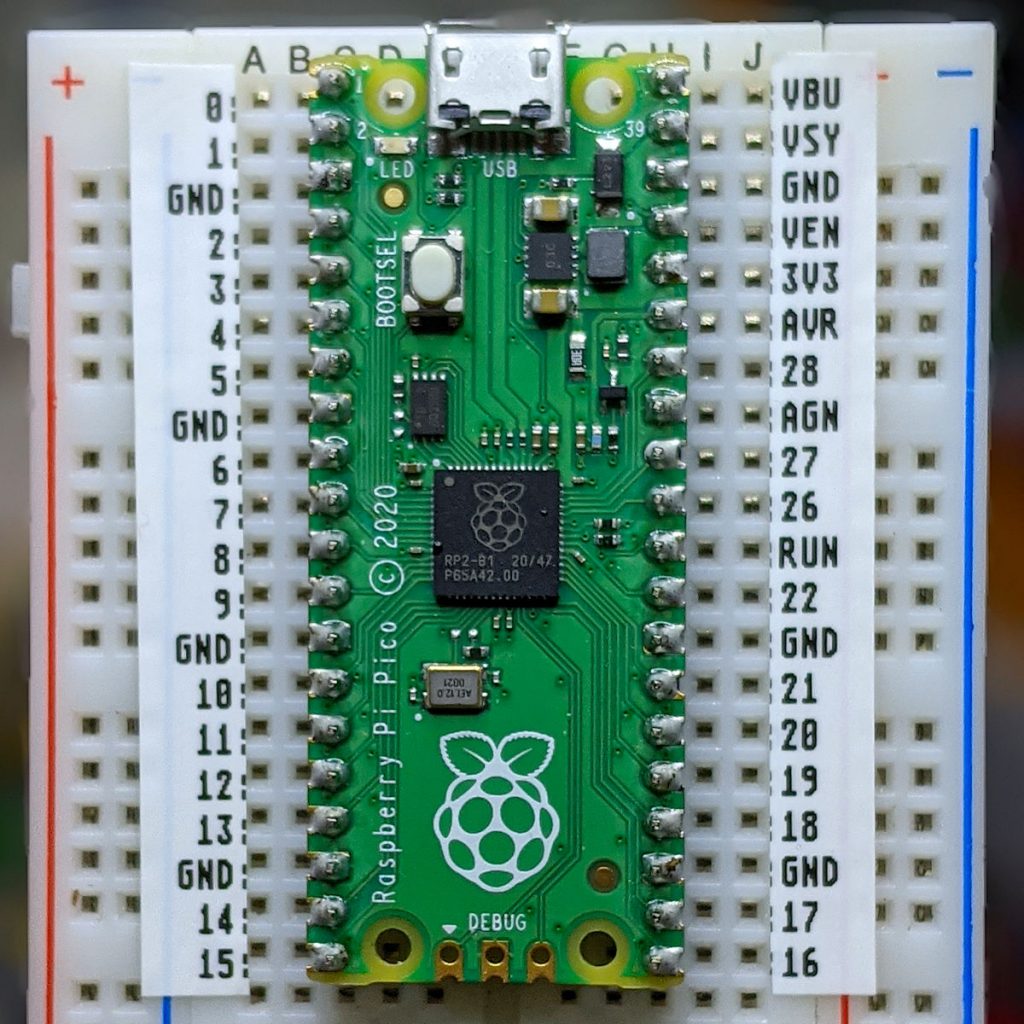

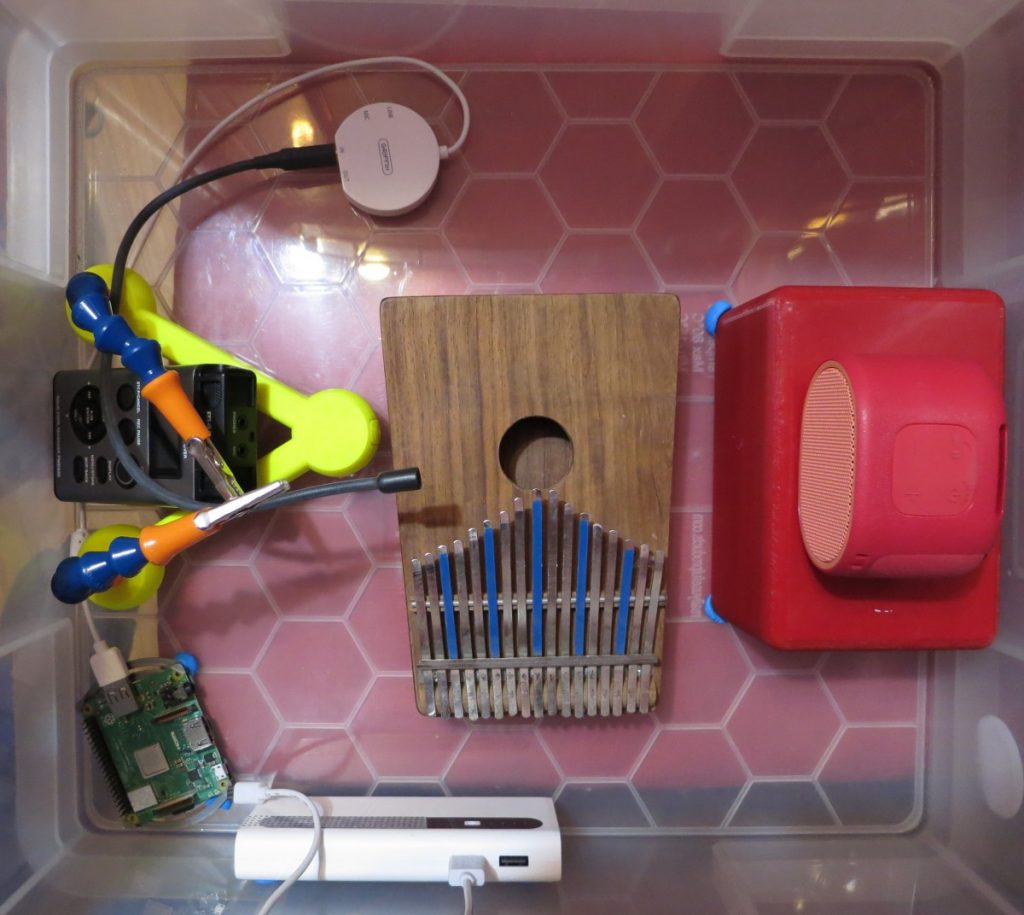

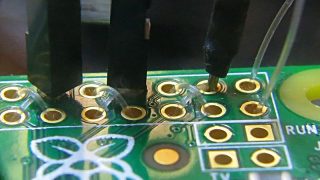

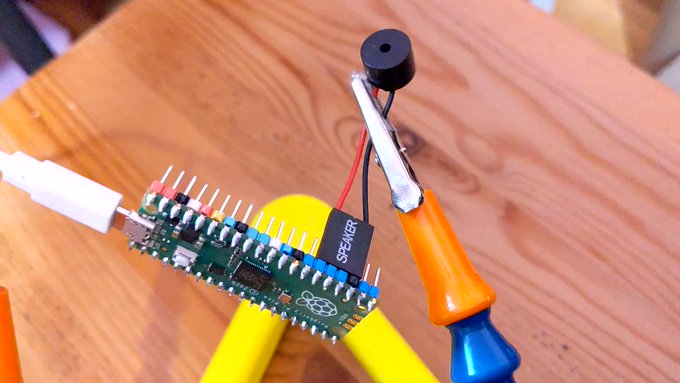

(video description: a plastic box containing three USB power banks, each with USB cable leading to a Raspberry Pi Pico board. Each board has a small electromagnetic speaker attached between ground and a data pin)

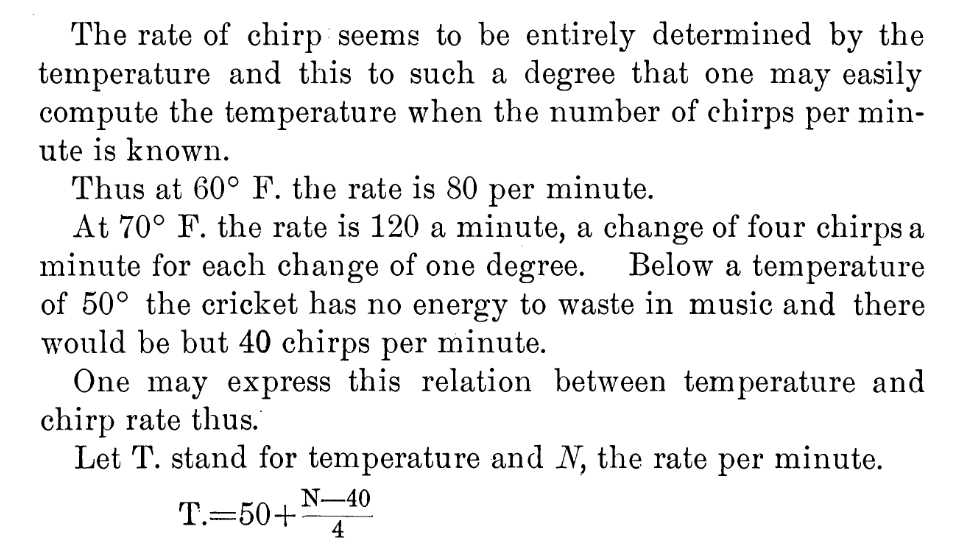

I could have merely made these beep away at a fixed rate, but I know that real crickets tend to chirp faster as the day grows warmer. This relationship is frequently referred to as Dolbear’s law. The American inventor Amos Dolbear published his observation (without data or species identification) in The American Naturalist in 1897, under the title “The Cricket as a Thermometer”.

When emulating crickets, I’m interested less in the rate of chirps per minute than in the period between chirps. I could also care entirely less about barbarian units, so I reformulated the law in °C (t) and milliseconds (p):

t = ⅑ × (40 + 75000 ÷ p)

Since I know that the micro-controller has an internal temperature sensor, I’m particularly interested in the inverse relationship:

p = 15000 ÷ (9 × t ÷ 5 − 8)
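
For anyone who wants to follow the reformulation, here is a sketch of the algebra. It assumes the commonly quoted form of Dolbear’s law, with the temperature in °F and the rate in chirps per minute; the symbols T_F and N are my own labels for those two quantities, while t and p are as defined above.

```latex
\begin{align*}
T_F &= 50 + \frac{N - 40}{4}, \qquad N = \frac{60000}{p} \\
T_F &= 40 + \frac{15000}{p} \\
t &= \frac{5}{9}\,(T_F - 32)
   = \frac{5}{9}\left(8 + \frac{15000}{p}\right)
   = \frac{1}{9}\left(40 + \frac{75000}{p}\right) \\
p &= \frac{15000}{\dfrac{9t}{5} - 8}
\end{align*}
```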

I can check this against one of Dolbear’s observations for 70 °F (= 21⅑ °C, or 190/9) and 120 chirps / minute (= 2 Hz, or a period of 500 ms):

p = 15000 ÷ (9 × t ÷ 5 − 8)

= 15000 ÷ (9 × (190 ÷ 9) ÷ 5 − 8)

= 15000 ÷ (190 ÷ 5 − 8)

= 15000 ÷ 30

= 500
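
The same check can be run as a few lines of plain Python. This is only a sketch: `temperature_c` is a helper name I made up for the inverse, and this `chirp_period_ms` returns an unrounded float so the round trip is easy to inspect.

```python
def chirp_period_ms(t_c):
    """Chirp period in ms for a temperature in °C (metric form of Dolbear's law)."""
    return 15000 / (9 * t_c / 5 - 8)


def temperature_c(period_ms):
    """Inverse relationship: temperature in °C for a chirp period in ms."""
    return (40 + 75000 / period_ms) / 9


# Dolbear's 70 °F observation: 190/9 °C should come out very close to 500 ms
print(round(chirp_period_ms(190 / 9), 3))

# and 500 ms should map back to roughly 21.11 °C
print(round(temperature_c(500), 2))
```

Because 190/9 is not exactly representable as a float, the computed period can land a hair either side of 500, which is worth remembering if the result is truncated with `int()`.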

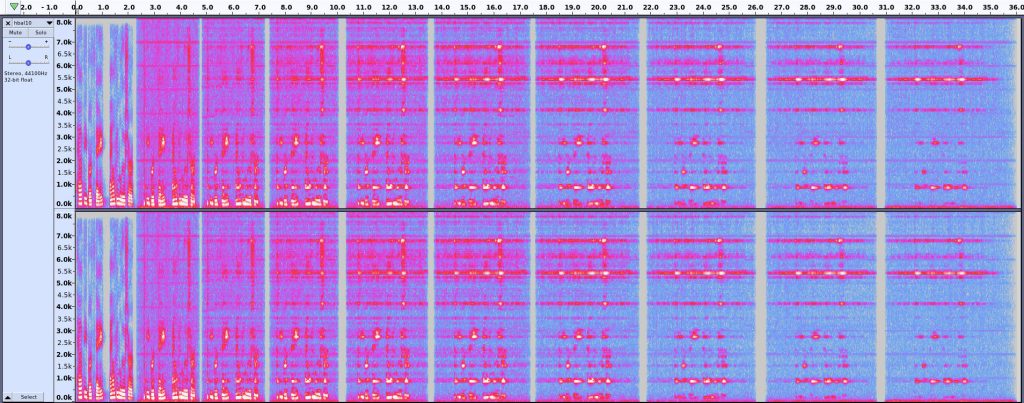

Now that I’ve got the timing worked out, how about the chirp sound? From a couple of recordings of cricket meadows I’ve made over the years, I observed:

- The total duration of a chirp is about ⅛ s

- A chirp is made up of four distinct events:

  - a quieter short tone;

  - a longer louder tone of a fractionally higher pitch;

  - the same longer louder tone repeated;

  - the first short tone repeated

- There is a very short silence between each tone

- Each cricket appears to chirp at roughly the same pitch: some slightly lower, some slightly higher

- The pitch of the tones is in the range 4500–5000 Hz: around D8 on the music scale
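
As a quick sanity check on the "around D8" remark, here is a small sketch that computes the pitch of D8. It assumes twelve-tone equal temperament with A4 = 440 Hz and the usual MIDI note numbering (middle C, C4, is note 60); the function name is mine.

```python
def note_frequency_hz(midi_note, a4_hz=440.0):
    """Equal-tempered frequency of a MIDI note number (A4 is note 69)."""
    return a4_hz * 2 ** ((midi_note - 69) / 12)


D8 = 110  # MIDI note number for D8, assuming C4 = 60

freq_d8 = note_frequency_hz(D8)
print(round(freq_d8))           # 4699
print(4500 <= freq_d8 <= 5000)  # True
```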

I didn’t attempt to model the actual stridulating mechanism of a particular species of cricket. I made what sounded sort of right to me. Hey, if Amos Dolbear could make stuff up and get it accepted as a “law”, I can at least get away with pulse width modulation and tiny tinny speakers …

This is the profile I came up with:

- 21 ms of 4568 Hz at 25% duty cycle

- 7 ms of silence

- 28 ms of 4824 Hz at 50% duty cycle

- 7 ms of silence

- 28 ms of 4824 Hz at 50% duty cycle

- 7 ms of silence

- 21 ms of 4568 Hz at 25% duty cycle

- 7 ms of silence

That’s a total of 126 ms, or ⅛ish seconds. In the code I made each instance play at a randomly selected pitch offset of up to ±200 Hz from the numbers above.
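
Every duration in that profile is a multiple of 7 ms, so it can be written as a small table of "cadence units". The sketch below is my own illustration of that idea (the names `PROFILE`, `chirp_duration_ms` and `duty_to_u16` are made up for this example); it adds up the total length and converts the duty cycles to the 16-bit values that MicroPython's `duty_u16()` expects.

```python
CADENCE_MS = 7  # one timing unit; each tone is followed by one unit of silence

# (cadence units, duty cycle as a fraction, frequency in Hz)
PROFILE = [
    (3, 0.25, 4568),
    (4, 0.50, 4824),
    (4, 0.50, 4824),
    (3, 0.25, 4568),
]


def chirp_duration_ms(profile, cadence_ms=CADENCE_MS):
    """Total length of one chirp, counting the silence after each tone."""
    return sum((units + 1) * cadence_ms for units, _duty, _freq in profile)


def duty_to_u16(fraction):
    """Scale a 0..1 duty cycle to the 0..65535 range, clamped at the top."""
    return min(65535, int(fraction * 65536))


print(chirp_duration_ms(PROFILE))                # 126
print([duty_to_u16(d) for _, d, _ in PROFILE])   # [16384, 32768, 32768, 16384]
```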

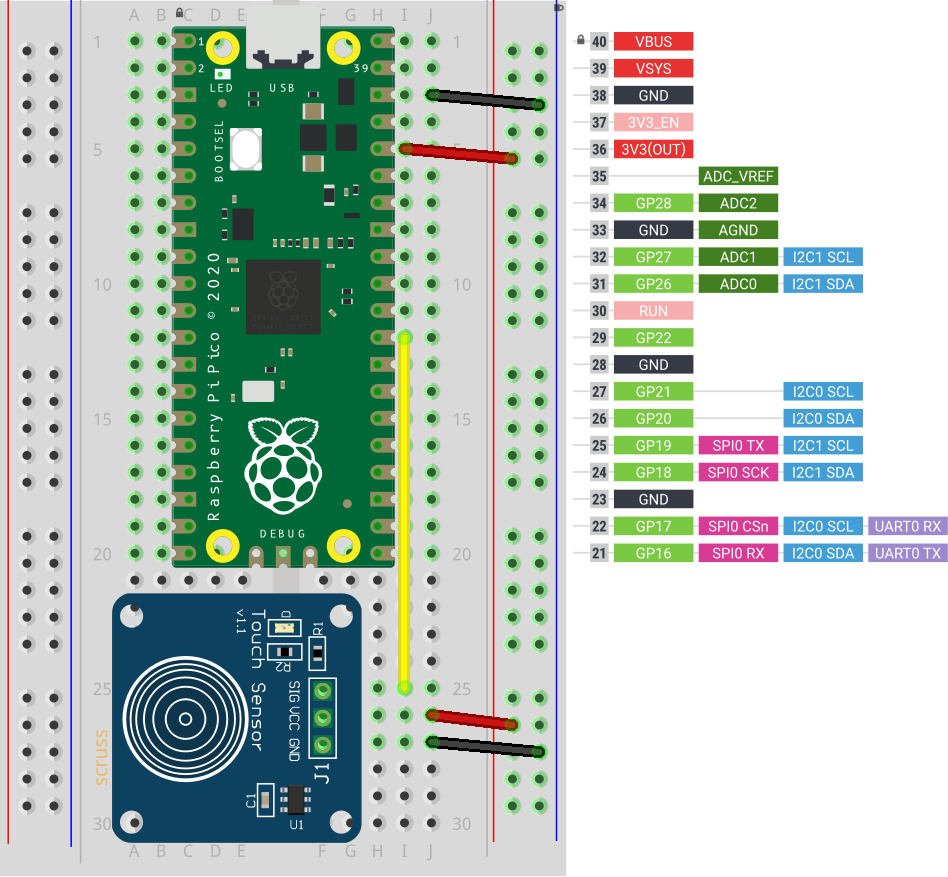

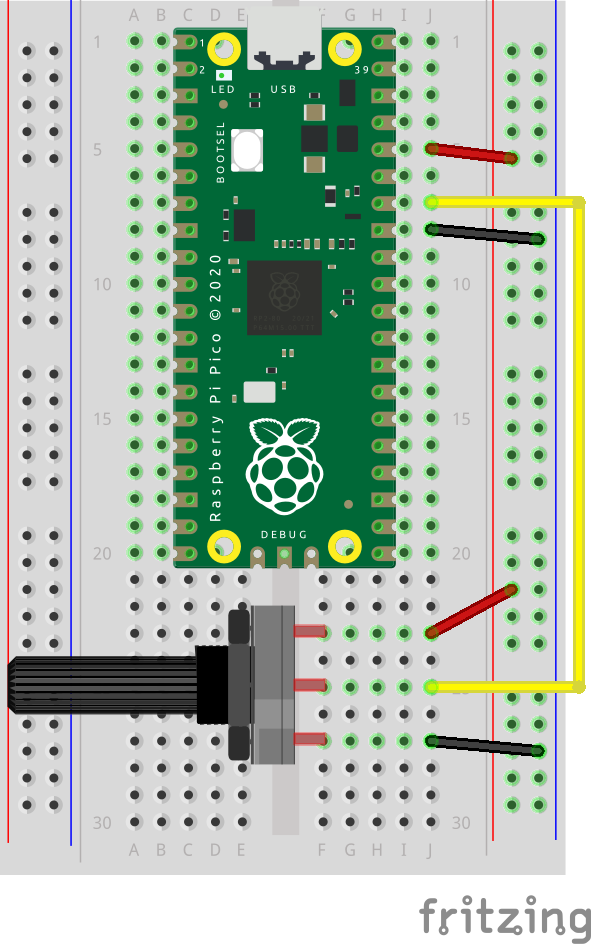

For the speaker, I have a bunch of cheap PC motherboard beepers. They have a Dupont header that spans four pins on a Raspberry Pi Pico header, so if you put one on the ground pin at pin 23, the output will be connected to pin 26, aka GPIO 20.

So — finally — here’s the MicroPython code:

    # cricket thermometer simulator - scruss, 2024-02
    # uses a buzzer on GPIO 20 to make cricket(ish) noises
    # MicroPython - for Raspberry Pi Pico
    # -*- coding: utf-8 -*-

    from machine import Pin, PWM, ADC, freq
    from time import sleep_ms, ticks_ms, ticks_diff
    from random import seed, randrange

    freq(125000000)  # use default CPU freq
    seed()  # start with a truly random seed
    pwm_out = PWM(Pin(20), freq=10, duty_u16=0)  # can't do freq=0
    led = Pin("LED", Pin.OUT)
    sensor_temp = ADC(4)  # adc channel for internal temperature
    TOO_COLD = 10.0  # crickets don't chirp below 10 °C (allegedly)
    temps = []  # for smoothing out temperature sensor noise
    personal_freq_delta = randrange(400) - 199  # different pitch every time
    chirp_data = [
        # cadence, duty_u16, freq
        # there is a cadence=1 silence after each of these
        [3, 16384, 4568 + personal_freq_delta],
        [4, 32768, 4824 + personal_freq_delta],
        [4, 32768, 4824 + personal_freq_delta],
        [3, 16384, 4568 + personal_freq_delta],
    ]
    cadence_ms = 7  # length multiplier for playback


    def chirp_period_ms(t_c):
        # for a given temperature t_c (in °C), returns the
        # estimated cricket chirp period in milliseconds.
        #
        # Based on
        # Dolbear, Amos (1897). "The cricket as a thermometer".
        # The American Naturalist. 31 (371): 970–971. doi:10.1086/276739
        #
        # The inverse function is:
        # t_c = (75000 / chirp_period_ms + 40) / 9
        return int(15000 / (9 * t_c / 5 - 8))


    def internal_temperature(temp_adc):
        # see pico-micropython-examples / adc / temperature.py
        return (
            27
            - ((temp_adc.read_u16() * (3.3 / (65535))) - 0.706) / 0.001721
        )


    def chirp(pwm_channel):
        for peep in chirp_data:
            pwm_channel.freq(peep[2])
            pwm_channel.duty_u16(peep[1])
            sleep_ms(cadence_ms * peep[0])
            # short silence
            pwm_channel.duty_u16(0)
            pwm_channel.freq(10)
            sleep_ms(cadence_ms)


    led.value(0)  # led off at start; blinks if chirping
    ### Start: pause a random amount (less than 2 s) before starting
    sleep_ms(randrange(2000))

    while True:
        loop_start_ms = ticks_ms()
        sleep_ms(5)  # tiny delay to stop the main loop from thrashing
        temps.append(internal_temperature(sensor_temp))
        if len(temps) > 5:
            temps = temps[1:]
        avg_temp = sum(temps) / len(temps)
        if avg_temp >= TOO_COLD:
            led.value(1)
            loop_period_ms = chirp_period_ms(avg_temp)
            chirp(pwm_out)
            led.value(0)
            loop_elapsed_ms = ticks_diff(ticks_ms(), loop_start_ms)
            # never pass a negative delay to sleep_ms
            sleep_ms(max(0, loop_period_ms - loop_elapsed_ms))

There are a few more details in the code that I haven’t covered here:

- The program pauses for a short random time on starting. This is to ensure that if you power up a bunch of these at the same time, they don’t start exactly synchronized

- The Raspberry Pi Pico’s temperature sensor can be slightly noisy, so the chirping frequency is based on the average of (up to) the last five readings

- There’s no chirping below 10 °C, because Amos Dolbear said so

- The built-in LED also flashes if the board is chirping. It doesn’t mimic the speaker’s PWM cadence, though.
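
The temperature handling can be tried out away from the board with a sketch like the one below. It reuses the constants from the listing above (0.706 V at 27 °C, 1.721 mV per °C, 3.3 V reference), but the function names and the raw readings are made up for illustration, and it runs on ordinary Python as well as MicroPython.

```python
def adc_to_celsius(raw_u16, vref=3.3):
    """Convert a 16-bit reading from the Pico's temperature channel to °C."""
    volts = raw_u16 * vref / 65535
    return 27 - (volts - 0.706) / 0.001721


def smoothed(history, reading, window=5):
    """Add a reading, keep the newest `window` values, return (history, mean)."""
    history = (history + [reading])[-window:]
    return history, sum(history) / len(history)


history = []
# made-up raw ADC values, roughly what a board near 27 °C might report
for raw in (14020, 14030, 14010, 14025, 14015, 14000):
    history, avg = smoothed(history, adc_to_celsius(raw))

print(len(history))   # 5: the oldest of the six readings has been dropped
print(round(avg, 1))
```

Averaging only the last five readings keeps the response quick when the board really does warm up or cool down, while taking the edge off single noisy samples.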

Before I show you the next video, I need to say: no real crickets were harmed in the making of this post. I took the bucket outside (roughly -5 °C) and the “crickets” stopped chirping as they cooled down. Don’t worry, they started chirping again when I took them back inside.

(video description: a plastic box containing three USB power banks, each with USB cable leading to a Raspberry Pi Pico board. Each board has a small electromagnetic speaker attached between ground and a data pin)