NAPLPS — an almost-forgotten videotex vector graphics format with a regrettable pronunciation (/nap-lips/, no really) — was really hard to create. Back in the early days when it was a worthwhile Canadian initiative called Telidon (see Inter/Access’s exhibit Remember Tomorrow: A Telidon Story) it required a custom video workstation costing $$$$$$. It got cheaper by the time the 1990s rolled round, but it was never easy and so interest waned.

I don’t claim what I made is particularly interesting:

but even decoding the tutorial and standards material was hard. NAPLPS made heavy use of bitfields interleaved and packed into 7- and 8-bit characters. It was kind of a clever idea (lower-resolution data could be packed into fewer bytes), but the implementation is quite unpleasant.
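A minimal sketch of that interleaving follows. It assumes the layout that the `coord()` routine below emits. Each data character has bit 6 set and carries three bits of x in bits 5 to 3 and three bits of y in bits 2 to 0, most significant group first. Each value is treated as a two's complement number. `pack_xy` is a hypothetical helper for illustration, not something defined by the standard:

```python
def pack_xy(x: int, y: int, nbytes: int) -> str:
    """Interleave x and y three bits at a time into nbytes data characters.

    Assumption: each value is a (3 * nbytes)-bit two's complement number,
    so its top bit acts as the sign bit.
    """
    bits = 3 * nbytes
    mask = (1 << bits) - 1
    x &= mask  # wrap negative values into two's complement
    y &= mask
    out = []
    for i in range(nbytes):
        shift = bits - 3 * (i + 1)  # position of this 3-bit group
        out.append(chr(0x40 | (((x >> shift) & 7) << 3) | ((y >> shift) & 7)))
    return "".join(out)


print(pack_xy(62, 2, 3))  # three characters: 9 bits per value
print(pack_xy(3, -2, 1))  # one character: only 3 bits per value
```

With three characters, each value gets 9 bits. That gives `@xr` here, the same as `coord(62, 2)` produces. With a single character, each value gets only 3 bits, which is the "fewer bytes for lower resolution" trade-off.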

A few of the references/tools/resources I relied on:

- The NAPLPS: videotex/teletext presentation level protocol syntax standard. Long. Quite dull and abstract, but it is the reference

- The 1983 BYTE Magazine article series NAPLPS: A New Standard for Text and Graphics. Also long and needlessly wordy, with digressions into extensions that were never implemented. Contains a commented byte dump of an image that explains most concepts by example

- Technical specifications for NAPLPS graphics — aka NAPLPS.ASC. A large text file explaining how NAPLPS works. Fairly clear, but the ASCII art diagrams aren’t the most obvious

- TelidonP5 — an online NAPLPS viewer. Not perfect, but helpful for proofing work

- Videotex – NAPLPS Client for the Commodore 64 (archived) — a terminal for the C64 that supports (some) NAPLPS. Very limited in the size of file it can view

- John Durno has spent years recovering Telidon / NAPLPS works. He has published many useful resources on the subject

Here’s the fragment of code I wrote to generate the NAPLPS:

```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-
# draw a disappointing maple leaf in NAPLPS - scruss, 2023-09

# stylized maple leaf polygon, quite similar to
# the coordinates used in the Canadian flag ...
maple = [
    [62, 2],
    [62, 35],
    [94, 31],
    [91, 41],
    [122, 66],
    [113, 70],
    [119, 90],
    [100, 86],
    [97, 96],
    [77, 74],
    [85, 114],
    [73, 108],
    [62, 130],
    [51, 108],
    [39, 114],
    [47, 74],
    [27, 96],
    [24, 86],
    [5, 90],
    [11, 70],
    [2, 66],
    [33, 41],
    [30, 31],
    [62, 35],
]


def colour(r, g, b):
    # r, g and b are limited to the range 0-3
    return chr(0o74) + chr(
        64
        + ((g & 2) << 4)
        + ((r & 2) << 3)
        + ((b & 2) << 2)
        + ((g & 1) << 2)
        + ((r & 1) << 1)
        + (b & 1)
    )


def coord(x, y):
    # if you stick with 256 x 192 integer coordinates this should be okay
    xsign = 0
    ysign = 0
    if x < 0:
        xsign = 1
        x = x * -1
        x = ((x ^ 255) + 1) & 255
    if y < 0:
        ysign = 1
        y = y * -1
        y = ((y ^ 255) + 1) & 255
    return (
        chr(
            64
            + (xsign << 5)
            + ((x & 0xC0) >> 3)
            + (ysign << 2)
            + ((y & 0xC0) >> 6)
        )
        + chr(64 + ((x & 0x38)) + ((y & 0x38) >> 3))
        + chr(64 + ((x & 7) << 3) + (y & 7))
    )


with open("maple.nap", "w") as f:
    f.write(chr(0x18) + chr(0x1B))  # preamble
    f.write(chr(0o16))  # SO: into graphics mode
    f.write(colour(0, 0, 0))  # black
    f.write(chr(0o40) + chr(0o120))  # clear screen to current colour
    f.write(colour(3, 0, 0))  # red

    # *** STALK ***
    f.write(
        chr(0o44) + coord(maple[0][0], maple[0][1])
    )  # point set absolute
    f.write(
        chr(0o51)
        + coord(maple[1][0] - maple[0][0], maple[1][1] - maple[0][1])
    )  # line relative

    # *** LEAF ***
    f.write(
        chr(0o67) + coord(maple[1][0], maple[1][1])
    )  # set polygon filled
    # append all the relative leaf vertices
    for i in range(2, len(maple)):
        f.write(
            coord(
                maple[i][0] - maple[i - 1][0], maple[i][1] - maple[i - 1][1]
            )
        )

    f.write(chr(0x0F) + chr(0x1A))  # postamble
```

There are a couple of perhaps useful routines in there:

- `colour(r, g, b)` spits out the code for two bits per component RGB. Inputs are limited to the range 0–3 without error checking

- `coord(x, y)` converts integer coordinates to a NAPLPS output stream. Best limited to a 256 × 192 coordinate space. Will also work with positive/negative relative coordinates
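Going the other way is handy when proofing output by hand. Here's a sketch of decoders for those two routines. It assumes the same bit layouts they use. `decode_coord` and `decode_colour` are hypothetical names, and `decode_coord` accepts any number of data characters, not just three:

```python
def decode_coord(data: str) -> tuple[int, int]:
    """Unpack coordinate data characters back into a signed (x, y) pair.

    Assumes the coord() layout: bits 5-3 of each character carry x,
    bits 2-0 carry y, most significant group first, two's complement.
    """
    x = y = 0
    for ch in data:
        b = ord(ch)
        x = (x << 3) | ((b >> 3) & 7)
        y = (y << 3) | (b & 7)
    bits = 3 * len(data)
    half = 1 << (bits - 1)
    if x >= half:  # sign bit set: undo two's complement
        x -= 1 << bits
    if y >= half:
        y -= 1 << bits
    return x, y


def decode_colour(ch: str) -> tuple[int, int, int]:
    """Unpack the data character that colour() emits after 0o74 into (r, g, b)."""
    b = ord(ch)
    # high bits of g, r, b sit in bits 5, 4, 3; low bits in bits 2, 1, 0
    r = ((b >> 3) & 2) | ((b >> 1) & 1)
    g = ((b >> 4) & 2) | ((b >> 2) & 1)
    blue = ((b >> 2) & 2) | (b & 1)
    return r, g, blue


print(decode_coord("@xr"))  # the data that coord(62, 2) produces
print(decode_colour("R"))  # the data character in colour(3, 0, 0)
```

These print `(62, 2)` and `(3, 0, 0)`. Negative relative moves round-trip too: `coord(-3, 5)` gives `xxm`, which decodes back to `(-3, 5)`.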

Here’s the generated file:

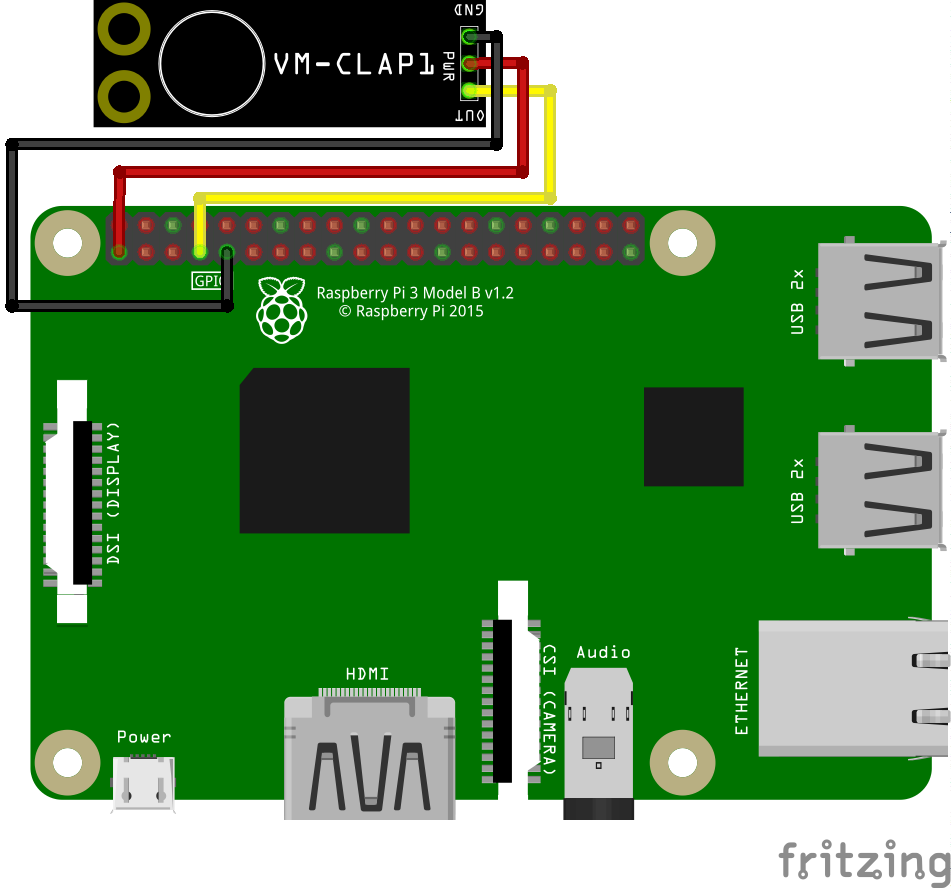
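To check a file like this without a viewer, here's a rough sketch that groups the stream into commands. It assumes that opcodes fall in 0x20 to 0x3F and data characters in 0x40 to 0x7F, which is consistent with every command the script writes. It skips control characters and doesn't interpret escape sequences. `split_commands` is a hypothetical helper:

```python
def split_commands(stream: str) -> list[tuple[int, str]]:
    """Group a NAPLPS graphics stream into (opcode, data characters) pairs."""
    commands: list[tuple[int, str]] = []
    for ch in stream:
        b = ord(ch)
        if 0x20 <= b <= 0x3F:
            # opcode character: start a new command
            commands.append((b, ""))
        elif 0x40 <= b <= 0x7F and commands:
            # data character: append it to the most recent command
            op, data = commands[-1]
            commands[-1] = (op, data + ch)
        # everything else (CAN, ESC, SO, SI, SUB ...) is skipped, and
        # escape sequences are not interpreted at all
    return commands


# the opening bytes of maple.nap, rebuilt by hand from the script:
# preamble, SO, black, clear, red, point set absolute, first line relative
sample = "\x18\x1b\x0e<@ P<R$@xr)@DA"
for op, data in split_commands(sample):
    print(oct(op), [ord(c) for c in data])
```

On the full `maple.nap`, the filled polygon should show up as a single `0o67` command carrying 69 data characters (23 coordinates of three characters each).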

Phil sent me a note last week asking how to turn an LED on or off using Python talking through Firmata to an Arduino. This was harder than it looked.