Stuff I found out setting this up:

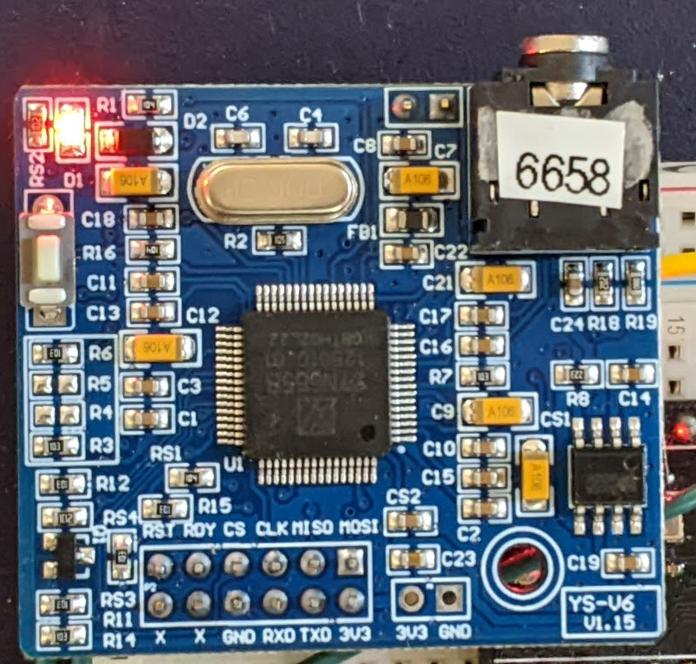

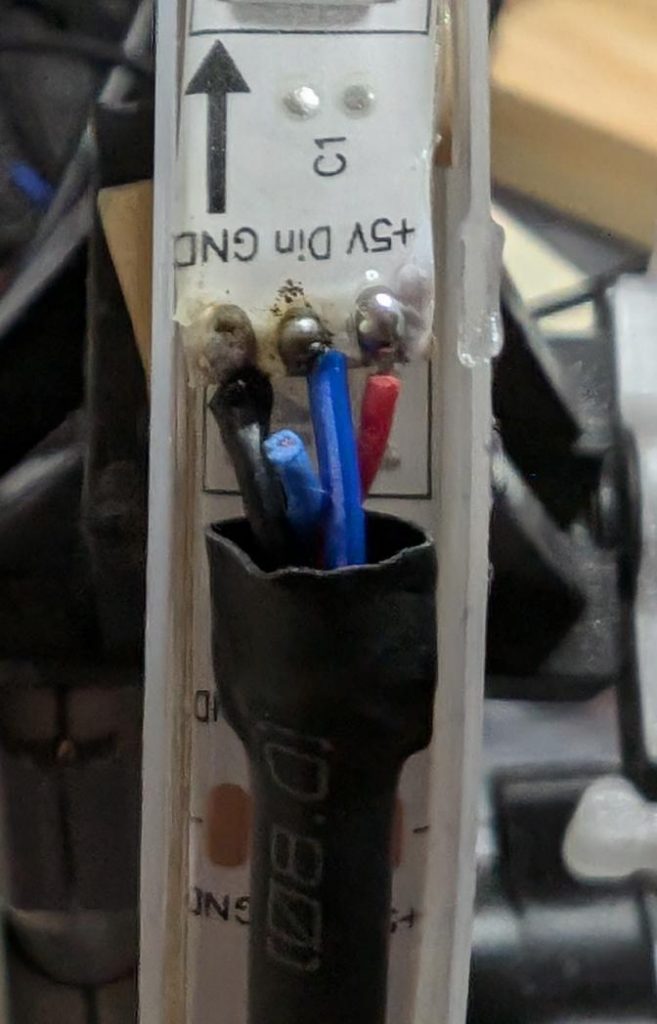

- Some old OLEDs, like these surplus pulse oximeter ones, don’t have pull-up resistors on their I2C lines, so I had to add my own. I’ve carefully hidden them behind the displays, but they’re there.

- Some MicroPython ports don’t include the complex type, so I had to replace the elegant z→z²+C iteration with some ugly code that tracks the real and imaginary parts separately.

- Some MicroPython ports don’t have os.uname(), but sys.implementation seems to cover most of the data I need.

- On some boards, machine.freq() is an integer value representing the CPU frequency. On others (STM32, for one), it’s a tuple whose first element is the CPU frequency. Aargh.
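The three workarounds above can be sketched as small standalone functions. This is an illustrative sketch, not the code from the listing: the names `escape_real`, `escape_complex`, `cpu_mhz` and `processor` are made up here. Nothing in it is MicroPython-specific, so it also runs on desktop Python, where the complex version is available for comparison.

```python
# Hypothetical helpers sketching the three portability workarounds.
# Nothing here is MicroPython-specific, so it also runs on desktop Python.


def escape_real(cr, cc, maxit=120):
    # Escape-time test for C = cr + i*cc without the complex type.
    # With z = zr + i*zc, z*z + C expands to
    #   (zr*zr - zc*zc + cr) + i*(2*zr*zc + cc)
    zr = zc = 0.0
    for k in range(maxit):
        zr, zc = zr * zr - zc * zc + cr, 2 * zr * zc + cc
        if zr * zr + zc * zc > 4.0:  # |z|^2 > 4, so no square root needed
            return k  # escaped on iteration k
    return None  # still bounded after maxit iterations


def escape_complex(c, maxit=120):
    # The "elegant" version, for ports that do have complex numbers
    z = 0j
    for k in range(maxit):
        z = z * z + c
        if abs(z) > 2.0:
            return k
    return None


def cpu_mhz(f):
    # f is the value of machine.freq(): an int on most ports, but a tuple
    # on STM32, where the first element is the CPU clock in Hz
    if isinstance(f, int):
        return f // 1_000_000
    return f[0] // 1_000_000


def processor(impl):
    # impl is sys.implementation. MicroPython's _machine attribute is a
    # string such as "Raspberry Pi Pico with RP2040"; the last word names
    # the chip. Desktop Python has no _machine, hence the fallback.
    return getattr(impl, "_machine", "unknown").split()[-1]


print(escape_real(0.5, 0.0), escape_complex(0.5 + 0j))  # 4 4
print(cpu_mhz(150_000_000), cpu_mhz((168_000_000, 42_000_000)))  # 150 168
```

Comparing the squared magnitude against 4.0, rather than |z| against 2.0, saves a square root on every iteration, which is why the real-arithmetic version uses it.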

These displays came from the collection of the late Tom Luff, a Toronto maker who passed away in late 2024 after a long illness. Tom had a huge component collection, and my way of remembering him is to show off his stuff being used.

Source:

```python
# benchmark Mandelbrot set (aka Brooks-Matelski set) on OLED
# scruss, 2025-01
# MicroPython
# -*- coding: utf-8 -*-

from machine import Pin, I2C, idle, reset, freq
# from os import uname
from sys import implementation
from ssd1306 import SSD1306_I2C
from time import ticks_ms, ticks_diff

# %%% These are the only things you should edit %%%
startpin = 16  # pin for trigger configured with external pulldown
# I2C connection for display
i2c = I2C(1, freq=400000, scl=19, sda=18, timeout=50000)
# %%% Stop editing here - I mean it!!!1! %%%


# maps value between istart..istop to range ostart..ostop
def valmap(value, istart, istop, ostart, ostop):
    return ostart + (ostop - ostart) * (
        (value - istart) / (istop - istart)
    )


WIDTH = 128
HEIGHT = 64
TEXTSIZE = 8  # 16x8 text chars
maxit = 120  # DO NOT CHANGE!
# value of 120 gives roughly 10 second run time for Pico 2W

# get some information about the board
# thanks to projectgus for the sys.implementation tip
if type(freq()) is int:
    f_mhz = freq() // 1_000_000
else:
    # STM32 has freq return a tuple
    f_mhz = freq()[0] // 1_000_000

sys_id = (
    implementation.name,
    ".".join([str(x) for x in implementation.version]).rstrip(
        "."
    ),  # version
    implementation._machine.split()[-1],  # processor
    "%d MHz" % (f_mhz),  # frequency
    "%d*%d; %d" % (WIDTH, HEIGHT, maxit),  # run parameters
)

p = Pin(startpin, Pin.IN)

# displays I have are yellow/blue, have no pull-up resistors
# and have a confusing I2C address on the silkscreen
oled = SSD1306_I2C(WIDTH, HEIGHT, i2c)
oled.contrast(31)
oled.fill(0)

# display system info, centred on screen
ypos = (HEIGHT - TEXTSIZE * len(sys_id)) // 2
for s in sys_id:
    ts = s[: WIDTH // TEXTSIZE]
    xpos = (WIDTH - TEXTSIZE * len(ts)) // 2
    oled.text(ts, xpos, ypos)
    ypos = ypos + TEXTSIZE

oled.show()

while p.value() == 0:
    # wait for button press
    idle()

oled.fill(0)
oled.show()
start = ticks_ms()

# NB: oled.show() is *slow*, so only refresh once per row
for y in range(HEIGHT):
    # complex range reversed because display axes wrong way up
    cc = valmap(float(y + 1), 1.0, float(HEIGHT), 1.2, -1.2)
    for x in range(WIDTH):
        cr = valmap(float(x + 1), 1.0, float(WIDTH), -2.8, 2.0)
        # can't use complex type as small boards don't have it (dammit)
        zr = 0.0
        zc = 0.0
        for k in range(maxit):
            t = zr
            zr = zr * zr - zc * zc + cr
            zc = 2 * t * zc + cc
            if zr * zr + zc * zc > 4.0:
                oled.pixel(x, y, k % 2)  # set pixel if escaped
                break
    oled.show()

elapsed = ticks_diff(ticks_ms(), start) / 1000
elapsed_str = "%.1f s" % elapsed
# oled.text(" " * len(elapsed_str), 0, HEIGHT - TEXTSIZE)
# blank a filled box behind the timing text so it stays readable
oled.rect(
    0, HEIGHT - TEXTSIZE, TEXTSIZE * len(elapsed_str), TEXTSIZE, 0, True
)
oled.text(elapsed_str, 0, HEIGHT - TEXTSIZE)
oled.show()

# we're done, so clear screen and reset after the button is pressed
while p.value() == 0:
    idle()

oled.fill(0)
oled.show()
reset()
```

(also here: benchmark Mandelbrot set (aka Brooks-Matelski set) on OLED – MicroPython)
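A quick check of the `valmap()` calls in the listing shows which part of the complex plane ends up on the 128×64 display. This sketch copies `valmap()` and runs on desktop Python; the variable names `left`, `right`, `top`, `bottom`, `dx` and `dy` are made up for illustration.

```python
def valmap(value, istart, istop, ostart, ostop):
    # linear map from istart..istop onto ostart..ostop, as in the listing
    return ostart + (ostop - ostart) * ((value - istart) / (istop - istart))


WIDTH, HEIGHT = 128, 64

# the listing passes pixel column x as x + 1 (1..128) and row y as y + 1 (1..64)
left = valmap(1.0, 1.0, float(WIDTH), -2.8, 2.0)  # x = 0
right = valmap(float(WIDTH), 1.0, float(WIDTH), -2.8, 2.0)  # x = 127
top = valmap(1.0, 1.0, float(HEIGHT), 1.2, -1.2)  # y = 0
bottom = valmap(float(HEIGHT), 1.0, float(HEIGHT), 1.2, -1.2)  # y = 63

# distance between neighbouring pixels in the complex plane:
# 127 gaps across a 4.8-unit span, 63 gaps down a 2.4-unit span
dx = (2.0 - -2.8) / (WIDTH - 1)
dy = (1.2 - -1.2) / (HEIGHT - 1)

print("real: %.1f to %.1f, imag: %.1f to %.1f" % (left, right, top, bottom))
# real: -2.8 to 2.0, imag: 1.2 to -1.2
print("dx = %.4f, dy = %.4f" % (dx, dy))
# dx = 0.0378, dy = 0.0381
```

So the window matches the display's 2:1 shape, but because there are 127 gaps across and 63 down, a vertical pixel step covers a little under 1% more of the plane than a horizontal one.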

I will add more tests as I get around to wiring up the boards. I have so many (too many?) MicroPython boards!

Results are here: MicroPython Benchmarks
