overheads in both space and execution time.â€

Imagine you have a programming task that involves parsing and analyzing text. Nothing complicated: maybe just breaking it into tokens. Now imagine the only programming language you had available:

- has no text handling functions at all: you can pack characters into numeric types, but how they are packed and how many you get per type are system dependent;

- allows integers in variables starting with the letters I→N, with A→H and O→Z floating point;

- has IF … THEN but no ELSE, with the preferred form being

IF (expr) neg, zero, pos

where expr is the expression to evaluate, and neg, zero and pos are statement labels to jump to if the evaluation is negative, zero or positive, respectively; - has only enough memory for (linear, non-associative) arrays of a couple of thousand entries;

- disallows recursion completely;

- charges for computing time such that a solo researcher’s work might cost many times their salary in a few weeks.

Sounds impossible, right? But that’s the world described in Colin Day’s book from 1972, Fortran techniques with special reference to non-numerical applications.

The programming language used is USA Standard FORTRAN X3.9 1966, commonly known as Fortran IV after IBM’s naming convention. For all it looks crude today, Fortran was an efficient, sod-the-theory-just-get-the-job-done language that allowed numerical problems to be described as a text program and solved with previously impossible speed. Every computer shipped with some form of Fortran compiler at the time. Day wasn’t alone working within Fortran IV’s text limitations in the early 1970s: the first Unix tools at Bell Labs were written in Fortran IV — that was before they built themselves their own toolchain and invented the segmentation fault.

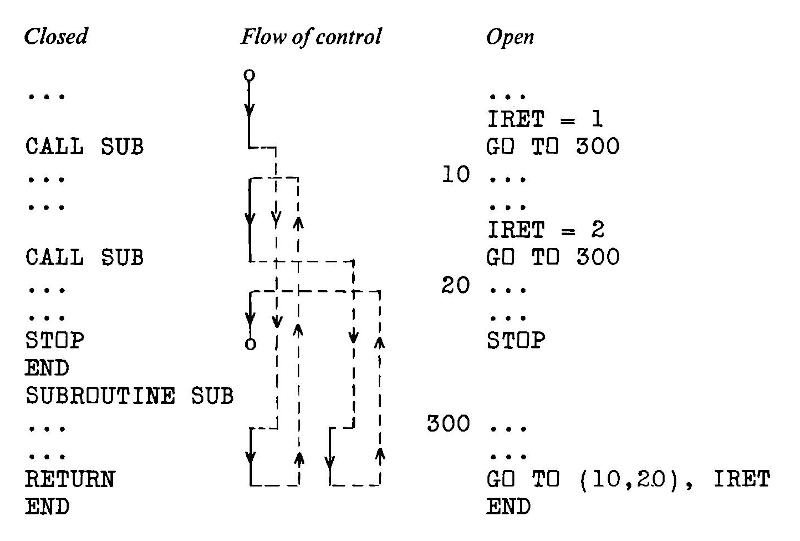

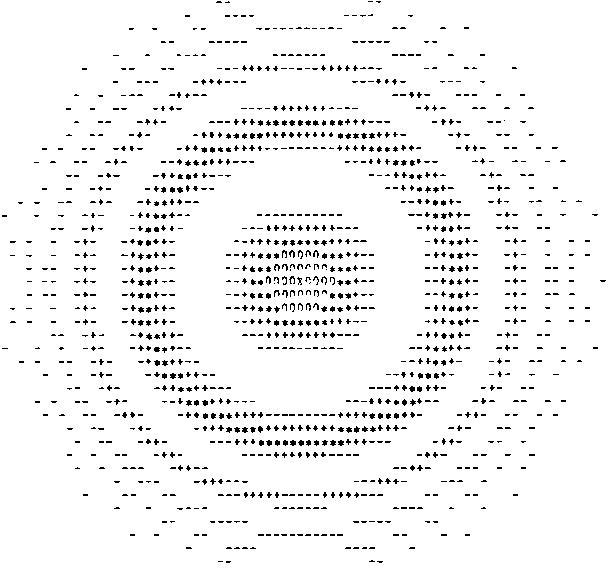

The book is a small (~ 90 page) delight, and is a window into system limitations we might almost find unimaginable. Wanna create a lookup table of a thousand entries? Today it’s a fraction of a thought and microseconds of program time. But nearly fifty years ago, Colin Day described methods of manually creating two small index and target arrays and rolling your own hash functions to store and retrieve stuff. Text? Hollerith constants, mate; that’s yer lot — 6HOH HAI might fit in one computer word if you were running on big iron. Sorting and searching (especially without recursion) are revealed to be the immensely complex subjects they are, all hidden behind today’s one-liner methods. Day shows methods to simulate recursion with arrays standing in for pointer stacks of GO TO targets (:coding_horror_face:). And if it’s graphics you want, that’s what the line printer’s for:

(This image was deemed impressive enough by Cambridge University Press that they used it as the cover of the book. The same function became a bit of a visual cliché, with home computers being able to render it in colour and isometric 3D less than a decade later.)

Why do I like this book enough to track down a used copy, import it, scan it, correct it and upload it to the Internet Archive? To me, it shows the layers we now take for granted, and the privilege we have with these hard problems of half a century ago being trivially soluble on a $10 computer the size of a stick of gum. When we run today’s massive AI models with little interest in the underlying assumptions but a sharp focus on getting the results we want, we do a disservice to the years of R&D that got us here.

The ‘charges for computing time’ comment above is from Colin’s website. Early central computing facilities had the SaaS billing down solid, partly because many mainframes were rented from the vendor and system usage was accounted for in minute detail. Apparently the system Colin used (when a new lecturer) was at another college, and it was the custom to send periodic invoices for CPU time and storage used back to the user’s department. Nowhere on these invoices did it say that these accounts were for information only and were not payable. Not the best way to greet your users.

(Incidentally, if you hate yourself and everyone else around you, you can get a feel of system billing on any Linux system by enabling user quotas. You’ll very likely stop doing this almost immediately as the restrictions and reporting burden seem utterly alien to us today.)

While the book is still very much in copyright, the copy I have sat unread at Lakehead University Library since June 1995; the due date slip’s still pasted in the back. It’s been out of print at Cambridge University Press since May 1987, even if they do have a plaintive/passive aggressive “hey we could totally make an ebook of this if you really want it†link on their site. I — and the lovely folks hosting it at the Internet Archive — have saved them from what’s evidently too much trouble. I won’t even raise an eyebrow if they pull a Nintendo and start selling this scan.

Colossal thanks to Internet Archive for making the book uploading process much easier than I thought it was. They’ve completely revamped the processing behind it, and the fully open-source engine gives great results. As ever, if you assumed you knew how to do it, think again and read the How to upload scanned images to make a book guide. Uploading a zip file of images is much easier than mucking about with weird command-line TIFF and PDF tools. The resulting PDF is about half the size of the optimized scans I uploaded, and it’s nicely tagged with metadata and contains (mostly) searchable text. It took more than an hour to process on the archive’s spectacularly powerful servers, though, so I hate to think what Colin Day’s bill would have been in 1972 for that many CPU cycles … or if even a computer of that time, given enough storage, could complete the project by now.